Explainable AI for the Classification of Brain MRIs

ECAI 2025 Workshop on EXPLIMED

Abstract

Machine learning applied to medical imaging suffers from a lack of trust due to the opacity of AI models and the absence of clear explanations for their outcomes. Explainable AI (XAI) has therefore become a crucial research focus, particularly in high-stakes domains such as healthcare. However, many AI models—both research-based and commercial—are inaccessible for white-box analysis, which requires internal model access. This underscores the need for black-box explainability tools tailored for medical applications. While several such tools exist for general images, their effectiveness in medical imaging remains largely unexplored.

Methods

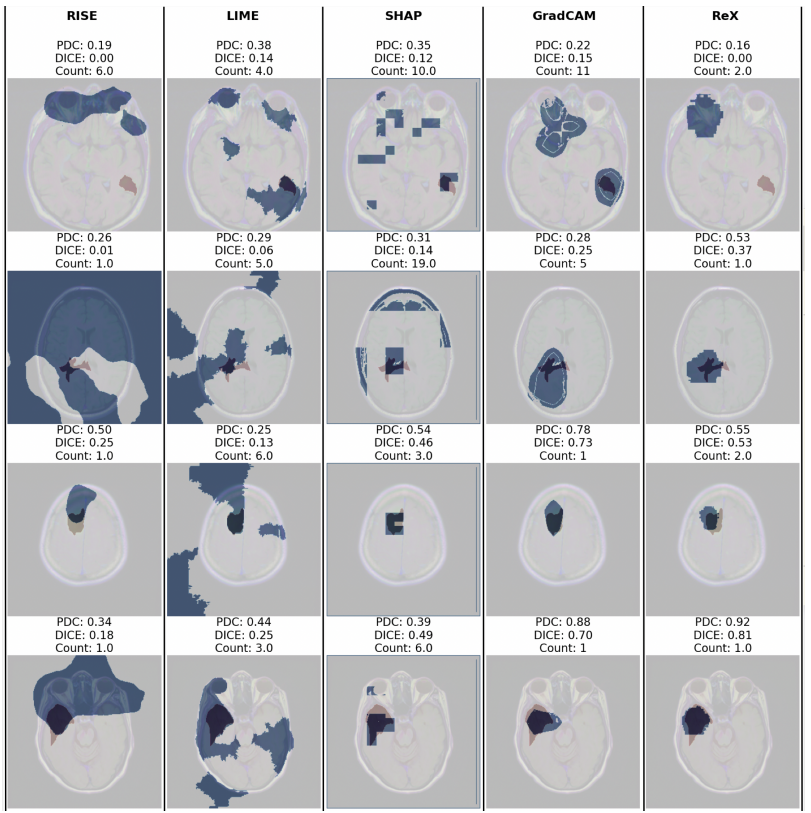

We used a publicly available brain MRI dataset and a classification model trained to distinguish between cancerous and non-cancerous slices. We evaluated several black-box explainability tools, including LIME, RISE, Integrated Gradients (IG), SHAP, and ReX, as well as Grad-CAM as a white-box baseline. Our assessment employed widely accepted evaluation metrics: the Dice Coefficient, Hausdorff Distance, and Jaccard Index. Additionally, we introduced a novel Penalized Dice Coefficient that integrates multiple metrics to provide a more comprehensive evaluation of explanation quality.
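As a rough illustration of the three standard metrics named above, the sketch below compares a binarized explanation mask with a ground-truth tumor mask. It is a minimal pure-Python sketch and not the evaluation code used in this study. The function names, the nested-list mask representation, and the conventions for empty masks are all assumptions made for the example. The Penalized Dice Coefficient is not reproduced because its definition is not given in this summary.

```python
import math


def mask_pixels(mask):
    """Return the set of (row, col) coordinates where a 2-D binary mask is nonzero."""
    return {(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v}


def dice_coefficient(pred, truth):
    """Dice = 2|A ∩ B| / (|A| + |B|); 1.0 for two empty masks (assumed convention)."""
    a, b = mask_pixels(pred), mask_pixels(truth)
    if not a and not b:
        return 1.0
    return 2.0 * len(a & b) / (len(a) + len(b))


def jaccard_index(pred, truth):
    """Jaccard = |A ∩ B| / |A ∪ B|; 1.0 for two empty masks (assumed convention)."""
    a, b = mask_pixels(pred), mask_pixels(truth)
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def hausdorff_distance(pred, truth):
    """Symmetric Hausdorff distance in pixel units, by brute force over all pixels.

    Returns infinity if either mask is empty (assumed convention).
    """
    a, b = mask_pixels(pred), mask_pixels(truth)
    if not a or not b:
        return math.inf

    def directed(src, dst):
        # Largest distance from any point in src to its nearest point in dst.
        return max(min(math.dist(p, q) for q in dst) for p in src)

    return max(directed(a, b), directed(b, a))


# Hypothetical 4x4 example: the explanation overlaps half of the tumor region.
explanation = [
    [1, 1, 0, 0],
    [1, 1, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]
tumor = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]

print(dice_coefficient(explanation, tumor))    # 0.5
print(jaccard_index(explanation, tumor))       # 0.333...
print(hausdorff_distance(explanation, tumor))  # 1.0
```

Dice and Jaccard reward overlap, with higher values being better. The Hausdorff Distance measures the worst-case boundary mismatch, with lower values being better, so the three can rank the same explanation differently. The brute-force distance computation is quadratic in the number of mask pixels, which is acceptable for a sketch but slow for full-resolution slices.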

Results

Our results show that ReX achieves a Dice Coefficient of 0.42±0.20, outperforming other black-box methods and demonstrating performance comparable to Grad-CAM (Dice Coefficient = 0.33±0.22). A qualitative analysis further highlights key failure modes in existing XAI methods, emphasizing the importance of robust explainability tools in medical applications.

Conclusions

All the XAI tools evaluated in this study exhibit limitations when applied to tumor detection in MRIs. However, ReX consistently outperforms alternative black-box methods and achieves results on par with Grad-CAM, a significant finding given that the latter relies on white-box access. These findings underscore the potential of ReX as a viable XAI tool for medical imaging applications.

How to cite this work:

@misc{blake2024explainable,
  title = {Explainable AI for the Classification of Brain MRIs},
  author = {Nathan Blake and David Kelly and Santiago Calderón-Peña and Akchunya Chanchal and Hana Chockler},
  year = {2024},
  month = {06},
  doi = {10.21203/rs.3.rs-4619245/v1}
}